Computing Power Can Get You Out of Some Tight Sampling Spots

By Peter Lance, PhD, MEASURE Evaluation

MEASURE Evaluation conducts all kinds of surveys to obtain estimates representative at a population level. Weights are often applied during estimation to bend the information contribution of observations back toward their true population share. An observation’s weight is simply the inverse of its probability of selection, which typically can be computed with basic formulas. But what if that’s not possible? In those instances, we have found solutions by relying on raw computing power.

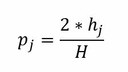

First, a little background. Consider selecting a sample of clusters with the probability of selection for each cluster being proportional to its size. This means that clusters that are bigger, usually in terms of the number of households in them, have a higher probability of selection. Suppose that cluster j has households within it, and there are H households across the clusters from among which we select our sample. If you select just one cluster, cluster j’s selection probability is

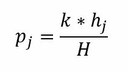

If two clusters were selected, then j’s selection probability would be twice as big:

More generally, if k clusters are selected, j’s selection probability is

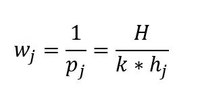

The weight associated with cluster j is then just the inverse of that probability of selection:

This weight was simple to compute because the probability of selection was so easy to model mathematically.
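As a small illustration of the arithmetic above, here is a minimal Python sketch. The cluster names and household counts are hypothetical and chosen only so the numbers are easy to follow; the function names are likewise assumptions made for this example.

```python
def pps_selection_probability(h_j, H, k):
    """Selection probability of a cluster with h_j households when k clusters
    are drawn with probability proportional to size from H households in total."""
    return k * h_j / H


def pps_weight(h_j, H, k):
    """Sampling weight: the inverse of the selection probability."""
    return 1.0 / pps_selection_probability(h_j, H, k)


# Hypothetical sampling frame: cluster name -> number of households.
cluster_sizes = {"A": 50, "B": 100, "C": 150, "D": 200}
H = sum(cluster_sizes.values())  # 500 households in total
k = 2                            # number of clusters to select

probs = {name: pps_selection_probability(h, H, k) for name, h in cluster_sizes.items()}
weights = {name: pps_weight(h, H, k) for name, h in cluster_sizes.items()}

for name in cluster_sizes:
    print(f"cluster {name}: probability {probs[name]:.2f}, weight {weights[name]:.2f}")
```

In this toy frame the probabilities add up to k, and the smallest cluster carries the largest weight, which is exactly the "bending back" toward population share described earlier.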

Sometimes there isn’t a simple formula for the probability of selection. This challenge emerged in a recent survey to support the impact evaluation of an orphans and vulnerable children (OVC) program in Rwanda funded by the United States Agency for International Development and the United States President’s Emergency Plan for AIDS Relief. For that survey, clusters were to be selected, following which households eligible for the program within selected clusters were to be interviewed. Our goal was to select a sample of clusters that would contain a predetermined number of eligible households. We thought this would be straightforward since we expected most clusters to have a large number of eligible households. As usual, funding was tight!

When MEASURE Evaluation received the list of eligible households in each cluster, we discovered a serious problem: many clusters had few eligible households, and a few clusters had many of them. The frequency of clusters with few eligible households might have forced us to select many, many clusters to achieve our target sample size of households. This was simply not feasible, given our limited budget and the high cost for travel to visit clusters.

We first decided to stratify selection. We selected a very large proportion of the clusters that had many eligible households, in the process getting as much sample as we could out of these few big clusters. Then we selected a smaller proportion of the clusters with few eligible households. However, these small clusters ranged in size from tiny (with as few as one participating household) to merely small. Random selection could therefore result in far too many tiny clusters being selected, implying that we would fall well short of the target number of households, or in too many modestly small ones being selected, implying that we might actually overshoot, which we could not afford either.

Indeed, we had to repeatedly perform this stratified selection process until the expected number of eligible households selected was tolerably close to our target sample size. However, there is no straightforward formula for the selection probability of each cluster when you keep re-selecting until you get the sample size you need. The reason is that there are too many ways that a cluster can be selected: a potentially huge number of sequences of selections could result in a cluster ultimately being selected.

Our solution was to harness the abundant available computing power by instead simulating the probabilities of selection. The idea behind this is elegantly simple: repeat this complex selection process many times (where, each time, we re-select until the sample size has roughly reached the target number of eligible households). The proportion of the repetitions in which a cluster is ultimately selected is then an estimate of that cluster’s probability of selection. For instance, if we repeat the selection process 100,000 times, the number of times any one cluster was selected across those 100,000 replications, divided by 100,000, is a fairly accurate measure of its probability of selection. The number 100,000 is rather arbitrarily chosen. In general, the more times you replicate, the more accurate the estimated probability, because you capture more and more of the selection sequences that might occur and get a better handle on their relative frequency.
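The simulation idea can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the procedure used in the Rwanda survey: the cluster sizes, the cutoff between strata, the number of clusters drawn per stratum, the target, and the tolerance are all hypothetical, and the acceptance rule here uses the realized household total rather than an expected one. It also runs 20,000 replications rather than 100,000 so that it finishes quickly.

```python
import random


def select_until_close(sizes, large, small, n_large, n_small, target, tol, rng,
                       max_tries=10_000):
    """Repeat a stratified draw until the selected clusters hold a number of
    eligible households within `tol` of `target`."""
    for _ in range(max_tries):
        chosen = rng.sample(large, n_large) + rng.sample(small, n_small)
        if abs(sum(sizes[c] for c in chosen) - target) <= tol:
            return chosen
    raise RuntimeError("no acceptable sample found; loosen tol or change the design")


def simulate_probabilities(sizes, large, small, n_large, n_small, target, tol,
                           reps, seed=0):
    """Estimate each cluster's selection probability as the share of
    replications in which it ends up in the accepted sample."""
    rng = random.Random(seed)
    counts = dict.fromkeys(sizes, 0)
    for _ in range(reps):
        for c in select_until_close(sizes, large, small, n_large, n_small,
                                    target, tol, rng):
            counts[c] += 1
    return {c: counts[c] / reps for c in sizes}


# Hypothetical frame: 5 big clusters and 20 small ones (eligible households each).
sizes = {f"L{i}": s for i, s in enumerate([60, 55, 50, 45, 40])}
sizes.update({f"S{i}": s for i, s in enumerate(
    [1, 1, 2, 2, 3, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 12, 13, 14, 15, 15])})
large = [c for c, s in sizes.items() if s >= 40]  # assumed stratum cutoff
small = [c for c, s in sizes.items() if s < 40]

probs = simulate_probabilities(sizes, large, small, n_large=4, n_small=6,
                               target=245, tol=5, reps=20_000)
# Weights are the inverse of the simulated probabilities (skip never-selected clusters).
weights = {c: 1.0 / p for c, p in probs.items() if p > 0}

for c in ("L0", "S0", "S19"):
    print(c, round(probs[c], 3), round(weights[c], 2))
```

Because the replications are independent, the simulation error of each estimated probability p is roughly the binomial standard error, the square root of p(1 − p) divided by the number of replications. For p near 0.3 and 100,000 replications that is about 0.0014, which gives a sense of how "fairly accurate" translates into numbers.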

The attractiveness of this approach is that it does not require one to mathematically model the actual probability of selection, which is often a tough job. Rather, you can use the method as long as you can model and replicate the selection process itself, which is typically much simpler.

This strategy is likely to have widespread usefulness in surveys in which it is hard to obtain valid selection probabilities and, hence, weights (at least, it’s hard to do without breaking the bank!). For example, the monitoring and evaluation of OVC programs is often conducted from formal lists of beneficiaries maintained by the program, which, as we’ve seen, can require all sorts of selection approaches that then complicate simple formulaic modelling of selection probabilities.

Advances in technology are making it possible to learn from surveys in ways that were not feasible until now. At MEASURE Evaluation, we strive to be a technical leader—and that includes harnessing the raw computing power at our fingertips to advance the global public health learning agenda.

For more information

Peter Lance and Aiko Hattori are co-authors of Sampling and Evaluation – A Guide to Sampling for Program Impact Evaluation. (2016). Chapel Hill, NC: MEASURE Evaluation, Carolina Population Center, University of North Carolina at Chapel Hill.